This nodal synth project is based on a false memory. Last night I was discussing false memories with friends and reflecting on some of my own, and I woke up the next day still thinking about it. I often think about something my friend Ben showed me years ago. I have a strong memory of connected objects, like a circuit diagram or a mechanical network, running like a program and making sound. I have a distinct image of it, which I am pleased to say I have recreated here; sadly it’s not sounding as good as I hoped, but I am still working on it. At the time (maybe 1998ish) Ben was studying music and physics. I had no idea what coding was then, but I understood mechanical systems and circuits, and I loved feedback, pendulum-based oscillation and so on. So it made sense to me to see this diagram, or whatever it was, in those terms: as objects with functions, masses, frequencies and directions, and the exchange of energy within a system. Sound.

Many years later (2005ish), I got the opportunity to learn about coding and about nodal modular programming systems (MAX/MSP and PureData). These made more sense to me than text-based code, and PD ended up as a go-to tool in my later practice. But I often think about this non-existent software, of which I have retained a seemingly vivid image for so many years. So I woke up and turned to p5.js and AI to help me start making this software a reality. I chose not to research similar software (I am sure there is some); I wanted to explore making my own memory a reality.

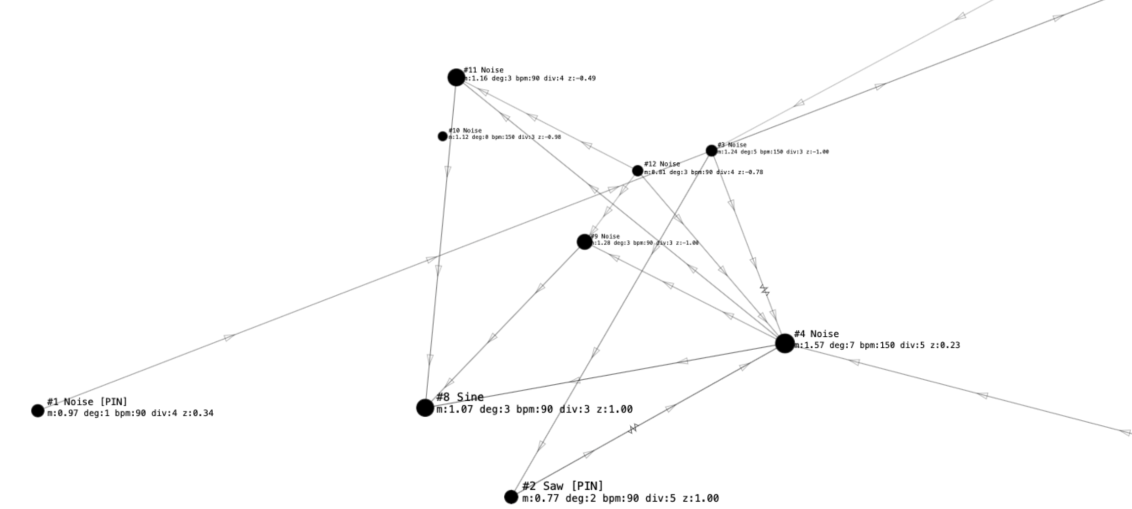

I described the instrument and the UI functionality I needed, and we quickly had a good prototype that looked almost exactly like the image in my head. After a few iterations, the graphical UI and its behaviours are nearly perfect (in terms of what I remember). The actual function of the objects and the resulting sound are the missing parts of my false memory, and it has been interesting to explore them. You can use the patch here: upload sounds and follow the on-screen instructions. Sound doesn’t work in the embedded version, so try this link instead if you like; you can also see the code there: https://editor.p5js.org/tonythevortex/full/sIgNPvhgn

So I’m assuming, or imagining, that such a UI could drive one of several instruments: a simple subtractive or additive synth, or something stranger, such as a granular synth. I went with granular first (the one I was most excited about and understood least). A granular synth would also let me play around with uploading field recordings.

On this granular path, the nodes move according to a range of preset behaviours and can be programmed to generate sounds affected by their XY position and their weight (mass, represented by size) (Z?)*.
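
To make “sound affected by XY position and weight” concrete, here is a minimal sketch of one possible mapping from a node’s state to grain parameters. The function name `nodeToGrainParams` and every range in it are hypothetical, not taken from the patch, and Z is left out.

```javascript
// Hypothetical mapping from a node's state to grain parameters. None of these
// ranges come from the original patch: x picks the read position in the
// sample, y sets playback rate (pitch), and mass sets grain length.
function clamp(v, lo, hi) {
  return Math.min(hi, Math.max(lo, v));
}

function nodeToGrainParams(node, width, height) {
  const u = clamp(node.x / width, 0, 1);  // 0 = left edge, 1 = right edge
  const v = clamp(node.y / height, 0, 1); // 0 = top, 1 = bottom
  return {
    bufferPosition: u,                    // fraction of the sample to read from
    playbackRate: Math.pow(2, 1 - 2 * v), // top: one octave up, bottom: one octave down
    // Bigger (heavier) nodes play longer grains: 20 ms + 10 ms per unit of mass, max 500 ms.
    grainSeconds: clamp(0.02 + 0.01 * node.mass, 0.02, 0.5),
  };
}

const params = nodeToGrainParams({ x: 200, y: 150, mass: 8 }, 400, 300);
console.log(params); // centre of a 400x300 canvas: position 0.5, rate 1, grain about 0.1 s
```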

When nodes are connected, they behave like pendulums in relation to each other, floating in an idealised space. Vectors join the nodes and exhibit elasticity, creating oscillator effects between connected nodes. To give it a circuit-like feel, functions such as resistors and resonators (springs) can be applied in-line on the vectors. I still need to update this so it’s clear sonically how the ‘signal’ is transmitted along a vector and affects other nodes.
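
The pendulum-like elasticity between two connected nodes can be sketched as a damped spring (Hooke’s law). This is a minimal sketch, assuming 2D positions, a rest length, simple per-frame velocity damping and semi-implicit Euler integration; `stepSpring` and its constants are hypothetical, not the patch’s code.

```javascript
// Damped spring between two connected nodes (Hooke's law).
// Assumed: 2D positions, a rest length, multiplicative damping per step,
// semi-implicit Euler (update velocity first, then position).
function stepSpring(a, b, { k = 2, restLength = 100, damping = 0.98, dt = 1 / 60 } = {}) {
  const dx = b.x - a.x;
  const dy = b.y - a.y;
  const dist = Math.hypot(dx, dy) || 1e-6;  // avoid dividing by zero
  const stretch = dist - restLength;        // > 0 stretched, < 0 compressed
  const fx = (k * stretch * dx) / dist;     // force on a (pulls toward b when stretched)
  const fy = (k * stretch * dy) / dist;

  // a = F / m: the heavier node accelerates less, so it swings more slowly.
  a.vx = (a.vx + (fx / a.mass) * dt) * damping;
  a.vy = (a.vy + (fy / a.mass) * dt) * damping;
  b.vx = (b.vx - (fx / b.mass) * dt) * damping;
  b.vy = (b.vy - (fy / b.mass) * dt) * damping;

  a.x += a.vx * dt; a.y += a.vy * dt;
  b.x += b.vx * dt; b.y += b.vy * dt;
  return Math.hypot(b.x - a.x, b.y - a.y);
}

const light = { x: 0, y: 0, vx: 0, vy: 0, mass: 1 };
const heavy = { x: 160, y: 0, vx: 0, vy: 0, mass: 4 };
let d = 0;
for (let i = 0; i < 5000; i++) d = stepSpring(light, heavy);
console.log(d.toFixed(1)); // oscillates, then settles near the 100-unit rest length
```

Lowering `damping` toward 1 keeps the pair oscillating longer, which is where the oscillator-like behaviour would come from.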

The problem is that every node generates sound. Instead, nodes could have a more summative or reductive effect on the signal, with other nodes chosen as outputs (DACs). But then this could end up looking more like a version of PureData.
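
One way to sketch the “choose some nodes as outputs (DACs)” idea: treat connections as directed, walk backwards from each output node, and only sum the nodes that can reach an output. The names `audibleNodes` and `mixSample` and the 1/N gain scaling are assumptions for illustration.

```javascript
// Walk the connections backwards from the output nodes to find every node
// that can reach an output. Output nodes are sound bodies too, so they count.
function audibleNodes(edges, dacIds) {
  const incoming = new Map();
  for (const [from, to] of edges) {
    if (!incoming.has(to)) incoming.set(to, []);
    incoming.get(to).push(from);
  }
  const heard = new Set(dacIds);
  const stack = [...dacIds];
  while (stack.length > 0) {
    const id = stack.pop();
    for (const src of incoming.get(id) || []) {
      if (!heard.has(src)) {
        heard.add(src);
        stack.push(src);
      }
    }
  }
  return heard;
}

// Sum one sample from each audible node, scaled by 1/N so the mix
// never exceeds the loudest single node.
function mixSample(samples, edges, dacIds) {
  const heard = audibleNodes(edges, dacIds);
  let sum = 0;
  for (const id of heard) sum += samples[id] ?? 0;
  return heard.size > 0 ? sum / heard.size : 0;
}

// a -> b -> out can be heard; c -> d is not connected to an output.
const edges = [["a", "b"], ["b", "out"], ["c", "d"]];
const samples = { a: 0.6, b: -0.2, c: 0.9, d: 0.5, out: 0 };
console.log([...audibleNodes(edges, ["out"])]); // [ 'out', 'b', 'a' ]
const mixed = mixSample(samples, edges, ["out"]);
```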

(A note to say I recently confirmed it must have been MAX/MSP he showed me, but my memory was more like the synth I have created here.)

What’s next for the nodal synth? From Granular to crystalline?

I am happy with the UI in terms of look and functionality, and the granular synth is fun to use, but it needs to be more responsive and dynamic sonically. I need to add functions to adjust grain size and speed while retaining this sound; at the moment some of my functions make only the subtlest change.
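
For reference, grain size and speed can be kept independent in a granular engine: grain size sets how long each windowed slice lasts, while playback rate sets how fast (and so at what pitch) each slice reads through the sample. This offline sketch assumes a mono `Float32Array` source and uses plain JavaScript rather than Web Audio; `renderGrains` and its options are hypothetical.

```javascript
// Offline granular sketch: overlap-adds Hann-windowed grains read from a source buffer.
// Hypothetical options: grainSize (s), grainsPerSecond, rate (speed/pitch within
// each grain), position (0..1 read point), jitter (0..1 random spread), seconds.
function renderGrains(source, sampleRate, opts = {}) {
  const { grainSize = 0.08, grainsPerSecond = 20, rate = 1,
          position = 0.5, jitter = 0.1, seconds = 1 } = opts;
  const out = new Float32Array(Math.floor(seconds * sampleRate));
  const grainLen = Math.max(2, Math.floor(grainSize * sampleRate));
  const hop = Math.max(1, Math.floor(sampleRate / grainsPerSecond)); // samples between grain starts
  const wrap = (n) => ((n % source.length) + source.length) % source.length;

  for (let start = 0; start < out.length; start += hop) {
    // Each grain starts near `position`, offset randomly by up to +/- jitter/2.
    const center = Math.min(Math.max(position + (Math.random() - 0.5) * jitter, 0), 1);
    const readStart = center * (source.length - 1);
    for (let i = 0; i < grainLen && start + i < out.length; i++) {
      // Hann window fades each grain in and out, avoiding clicks.
      const w = 0.5 - 0.5 * Math.cos((2 * Math.PI * i) / (grainLen - 1));
      out[start + i] += w * source[wrap(Math.floor(readStart + i * rate))];
    }
  }
  return out;
}

const sr = 8000;
const tone = Float32Array.from({ length: sr }, (_, n) => Math.sin((2 * Math.PI * 220 * n) / sr));
const cloud = renderGrains(tone, sr, { grainSize: 0.05, grainsPerSecond: 30, rate: 1.5 });
```

Raising `rate` here shifts the pitch of each grain without changing how often grains start, so grain size, density and pitch can each be adjusted on their own.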

While this patch is based on my false memory, it also relates to my other experiments with floating particles inspired by pond life, and to a dream which led me to work with Dures Sold as a basis for a musical instrument. It will be interesting to change this patch into a more drone-based generator where the nodes affect microtones.
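
As a hedged example of nodes “affecting microtones”, a node’s vertical position could be snapped to a microtonal grid around a drone’s base pitch using f = base × 2^(cents / 1200). The 24-steps-per-octave (quarter-tone) grid, the 110 Hz base and the `nodeToMicrotone` name are assumptions.

```javascript
// Hypothetical drone mapping: a node's vertical position picks a pitch on a
// microtonal grid above a base drone frequency.
// Assumed: 24 equal steps per octave (quarter tones), two octaves, 110 Hz base.
function nodeToMicrotone(y, height, { baseHz = 110, stepsPerOctave = 24, octaves = 2 } = {}) {
  const t = 1 - Math.min(Math.max(y / height, 0), 1);   // 0 at the bottom, 1 at the top
  const step = Math.round(t * stepsPerOctave * octaves); // snap to the nearest grid step
  const cents = (step * 1200) / stepsPerOctave;          // 50 cents per step on a 24-step grid
  return { step, cents, hz: baseHz * Math.pow(2, cents / 1200) };
}

console.log(nodeToMicrotone(300, 300)); // bottom: step 0, 110 Hz
console.log(nodeToMicrotone(150, 300)); // middle: step 24, one octave up (220 Hz)
```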

*I like the way this XYZ potentially gives a spatial position, meaning that the points and vectors collectively represent a three-dimensional crystalline form. I could add a fix-all-points option (crystallisation mode) where all nodes retain a fixed position in space. I used AI to render that image into reality.
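
The XYZ idea can be sketched with a simple perspective projection, plus a freeze flag for the crystallisation mode. This assumes each node stores a z depth and a velocity; `project`, `updateNodes` and the `crystallised` flag are hypothetical names.

```javascript
// Pseudo-3D sketch under assumed conventions: each node has a z depth,
// a perspective projection maps it onto the 2D canvas, and a hypothetical
// `crystallised` flag freezes every node in place.
function project(node, cx, cy, focal = 400) {
  // Larger z = further away: the node is drawn closer to the centre, and
  // `scale` can shrink its drawn size. Assumes node.z > -focal.
  const s = focal / (focal + node.z);
  return { sx: cx + node.x * s, sy: cy + node.y * s, scale: s };
}

function updateNodes(nodes, dt, crystallised) {
  if (crystallised) return; // crystallisation mode: every node keeps its position
  for (const n of nodes) {
    n.x += n.vx * dt;
    n.y += n.vy * dt;
    n.z += n.vz * dt;
  }
}

const near = project({ x: 100, y: 50, z: 0 }, 200, 200);  // sx 300, sy 250, scale 1
const far = project({ x: 100, y: 50, z: 400 }, 200, 200); // sx 250, sy 225, scale 0.5
```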

Note: The above text was written by me (I hope that’s clear!), while this instrument (the code) was developed with the assistance of AI and a lot of iterative prototyping and playtesting. I specified the interaction design and aesthetic goals, used AI to generate and refine p5.js/Web Audio code, then tested and directed changes and tweaked the code.

The following text is an AI Generated summary of the conversation and code developed:

Core concept: a physics-driven node graph where:

- Dots = sound bodies (each dot is a sound source + clock)
- Lines = signal relationships (audio routing and modulation)
- Actors on lines = processing modules (delay / drive / spring comb / filter)

Everything floats in pseudo-3D, so distances and motion continuously reshape timing and timbre.
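
As an illustration of distances continuously reshaping timing and timbre, a line’s length could set both a delay time (as if the signal travels along the wire at a fixed speed) and a low-pass cutoff. This mapping and the `lineToActorParams` name are assumptions, not the patch’s actual behaviour.

```javascript
// Hypothetical mapping from a line's length to actor parameters:
// longer lines = longer delay (timing) and a darker low-pass (timbre).
function lineToActorParams(a, b, { pxPerSecond = 1000, maxCutoffHz = 12000, minCutoffHz = 200 } = {}) {
  const length = Math.hypot(b.x - a.x, b.y - a.y, (b.z ?? 0) - (a.z ?? 0));
  const delaySeconds = length / pxPerSecond; // signal "travels" along the wire
  // Every 250 px of length halves the cutoff, down to a floor.
  const cutoffHz = Math.max(minCutoffHz, maxCutoffHz * Math.pow(0.5, length / 250));
  return { length, delaySeconds, cutoffHz };
}

const shortLine = lineToActorParams({ x: 0, y: 0 }, { x: 300, y: 400 }); // 500 px: 0.5 s, about 3000 Hz
const longLine = lineToActorParams({ x: 0, y: 0, z: 0 }, { x: 0, y: 0, z: 5000 }); // hits the 200 Hz floor
```

Because the physics moves the nodes every frame, recomputing these values per frame is what would make timing and timbre drift continuously.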

Development:

Concept seed: “A musical instrument for granular synthesis with a minimal vector-line UI: dots as sounds, lines as connections, circuit-like modules on wires, floating physics changing relationships.”

First prototype goal: “p5.js + Web Audio sketch with draggable nodes, connectable lines, basic granular playback from a buffer, and inline line actors (delay/filter/drive).”
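
The inline line actors can be sketched as small stateful per-sample functions chained along a connection. This is plain JavaScript for illustration only (the patch itself is built on p5.js/Web Audio), and the factory names `makeDrive`, `makeLowpass`, `makeDelay` and `runLine` are hypothetical.

```javascript
// Drive: tanh soft clipping keeps output within [-1, 1] however hard it is pushed.
// Assumed mapping: amount 0..1 gives an input gain of 1..10.
function makeDrive(amount) {
  return (x) => Math.tanh(x * (1 + amount * 9));
}

// One-pole low-pass: y += a * (x - y), with `a` derived from the cutoff.
function makeLowpass(cutoffHz, sampleRate) {
  const a = 1 - Math.exp((-2 * Math.PI * cutoffHz) / sampleRate);
  let y = 0;
  return (x) => (y += a * (x - y));
}

// Delay: a circular buffer; each output is the input from buf.length samples ago.
function makeDelay(seconds, sampleRate) {
  const buf = new Float32Array(Math.max(1, Math.round(seconds * sampleRate)));
  let i = 0;
  return (x) => {
    const out = buf[i];
    buf[i] = x;
    i = (i + 1) % buf.length;
    return out;
  };
}

// Apply the actors on a line in order, sample by sample.
function runLine(actors, input) {
  return input.map((x) => actors.reduce((s, actor) => actor(s), x));
}
```

A line carrying drive, then filter, then delay would be `runLine([makeDrive(0.5), makeLowpass(800, 44100), makeDelay(0.25, 44100)], samples)`, where `samples` is an array of audio samples.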

Feature expansion: “Per-node labels and data readouts; assign different samples to different nodes; add clearer circuit symbols for line modules.”

Interaction fixes: “Fix delete/backspace behavior; improve selection and editing reliability; add slow mode for editing.”

Physics controls: “Mass/size coupling (big dot moves slower); pin nodes in space; increase repulsion in slow mode so nodes spread out.”
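
The physics controls above can be sketched together: inverse-square repulsion between every pair of nodes, acceleration divided by mass so a big dot moves more slowly, pinned nodes that never move, and a slow mode that boosts repulsion while damping motion. `stepRepulsion` and all of its constants are hypothetical.

```javascript
// One physics step with assumed constants.
function stepRepulsion(nodes, { strength = 2000, slowMode = false, dt = 1 / 60 } = {}) {
  const repel = slowMode ? strength * 3 : strength; // slow mode: push harder so nodes spread out
  const damping = slowMode ? 0.8 : 0.98;           // slow mode: bleed off speed quickly
  for (let i = 0; i < nodes.length; i++) {
    for (let j = i + 1; j < nodes.length; j++) {
      const a = nodes[i];
      const b = nodes[j];
      const dx = b.x - a.x;
      const dy = b.y - a.y;
      const distSq = Math.max(dx * dx + dy * dy, 25); // floor avoids huge forces when nodes overlap
      const dist = Math.sqrt(distSq);
      const f = repel / distSq;                      // inverse-square push apart
      const fx = (f * dx) / dist;
      const fy = (f * dy) / dist;
      // a = F / m: the same push moves a big (heavy) dot less than a small one.
      a.vx -= (fx / a.mass) * dt;
      a.vy -= (fy / a.mass) * dt;
      b.vx += (fx / b.mass) * dt;
      b.vy += (fy / b.mass) * dt;
    }
  }
  for (const n of nodes) {
    if (n.pinned) { n.vx = 0; n.vy = 0; continue; } // pinned nodes stay where they are
    n.vx *= damping;
    n.vy *= damping;
    n.x += n.vx * dt;
    n.y += n.vy * dt;
  }
}

const small = { x: 0, y: 0, vx: 0, vy: 0, mass: 1 };
const big = { x: 10, y: 0, vx: 0, vy: 0, mass: 4 };
stepRepulsion([small, big]); // both move apart; the small dot moves 4x as far
```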

Clarity + sound upgrades: “Explain granular synthesis; make actors more legible with parameter readouts and editable ‘amount’; reduce runaway feedback.”
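
One common way to stop a feedback loop running away, offered here as an assumption rather than as what the patch does, is to keep the loop gain below 1 and soft-clip inside the loop. `makeFeedbackDelay` is a hypothetical name.

```javascript
// Feedback delay with two safeguards: the feedback gain is clamped below unity,
// and tanh soft-clips the signal inside the loop so it can never exceed [-1, 1].
function makeFeedbackDelay(delaySamples, feedback) {
  const g = Math.min(Math.max(feedback, 0), 0.95); // hard ceiling below unity gain
  const buf = new Float32Array(delaySamples);
  let i = 0;
  return (x) => {
    const delayed = buf[i];
    buf[i] = Math.tanh(x + g * delayed); // soft clip inside the loop
    i = (i + 1) % delaySamples;
    return delayed;
  };
}

const fb = makeFeedbackDelay(100, 5.0); // asks for 5x feedback; clamped to 0.95
const echoes = [];
for (let n = 0; n < 10000; n++) echoes.push(fb(n === 0 ? 1 : 0)); // a single impulse, then silence
```

With the clamp, each pass around the loop loses at least 5% of its level, so an impulse fades out as a series of echoes instead of building into a sustained howl.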

Musical direction: “Directional signal flow along connectors with arrows; add per-node clock + step sequencer editor for polyrhythms; add minimal glitch tick engine and node pulse visuals.”
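
Per-node clocks producing polyrhythms can be sketched as each node ticking at its own period and stepping through its own pattern; two patterns realign after the least common multiple of their cycle lengths. The fields `periodMs` and `pattern` and the helper names are hypothetical.

```javascript
// Hypothetical per-node clock: each node ticks every periodMs and steps
// through its own on/off pattern, so different pattern lengths form polyrhythms.
function nodeEvents(node, untilMs) {
  const events = [];
  for (let tick = 0; tick * node.periodMs < untilMs; tick++) {
    const step = tick % node.pattern.length;
    if (node.pattern[step]) events.push(tick * node.periodMs);
  }
  return events;
}

const gcd = (a, b) => (b === 0 ? a : gcd(b, a % b));

// Two nodes line up again after the LCM of their full cycle lengths (ms).
function cycleRealignMs(a, b) {
  const ca = a.periodMs * a.pattern.length;
  const cb = b.periodMs * b.pattern.length;
  return (ca / gcd(ca, cb)) * cb;
}

const kick = { periodMs: 250, pattern: [1, 0, 0] };   // hit every 3 ticks
const hat = { periodMs: 250, pattern: [1, 0, 0, 0] }; // hit every 4 ticks
console.log(nodeEvents(kick, 3000));     // [ 0, 750, 1500, 2250 ]
console.log(nodeEvents(hat, 3000));      // [ 0, 1000, 2000 ]
console.log(cycleRealignMs(kick, hat));  // 3000
```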