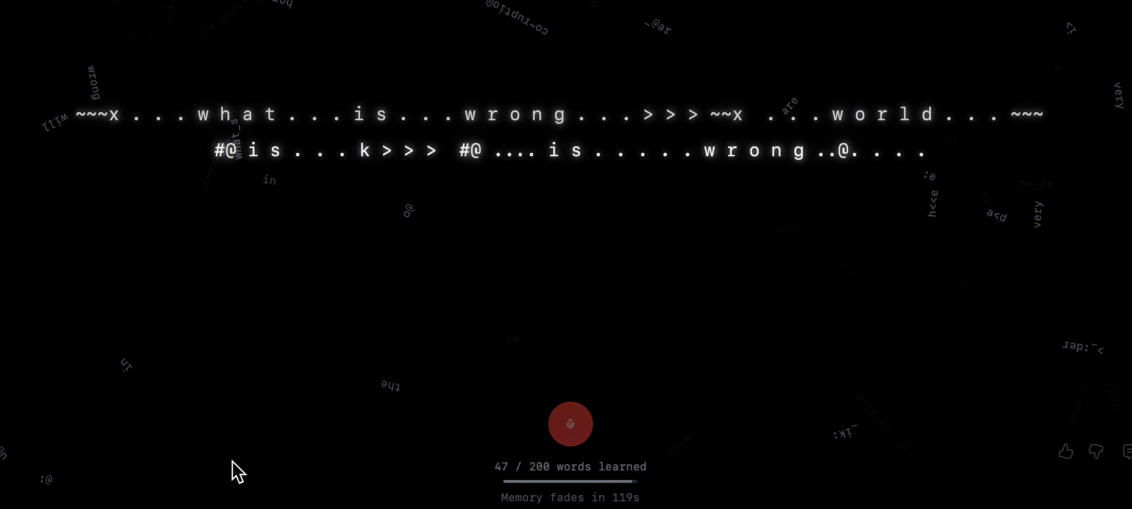

Plato’s Allegory of the Cave offers a metaphor for the limits of human perception. Plato describes a group of prisoners in a cave who know the outside world only through the shadows projected on the wall in front of them. Similarly, LLMs don't learn about the world from direct experience but from the “shadows” in their training data: billions of sentences written by people describing things, events, and our conversations about them. An LLM is trained to predict the next word in those texts, not to build an accurate picture of reality. Jan Kulveit suggested that an LLM is like a ‘blind oracle in yet another cave, who only hears the prisoners’ conversations about what they see’. I thought it would be interesting to simulate a version

Tag: AI

Carbon Cloud Chat

https://carbon-cloud-chat-21c0d88f.base44.app

As a thought experiment, I used AI to develop a carbon-aware AI chatbot that actively discourages the use of AI, either by guiding users toward lower-carbon alternatives or by encouraging them not to use AI at all. This year I have been working on several modules related to UX and sustainability in the digital arts, and the ecological impact of AI is a key concern in them. This is a work in progress, and I will use the app as a point of discussion in my lectures. I am aware of the meta-irony here: I used AI (Base44, with one primary design prompt and 20 further iterations, plus a few hours of testing) to build an AI that advises people not to overuse AI. That