I have been working with Manchester Science Partnerships to develop a range of workshops for their customers, the resident companies that use the park. The first session was the ‘mirror gaze experiment’ (MGE). During the MGE, participants are asked to stare at their own reflection in a mirror in a nearly dark room. The outline of the head is visible as a faint silhouette. In this state of partial sensory deprivation, the brain struggles to make sense of the information it receives. Forms and shapes begin to emerge as if from nowhere. For many observers, these develop into vivid visual hallucinations: “monsters, archetypical faces, faces of relatives, and animals” (Caputo, 2012; Bortolomasi et al., 2014). This phenomenon, known as the ‘strange face illusion’ (Caputo, 2010), emerges through a complex interaction of physiological and cognitive processes and unconscious projection, and it can elicit a strong emotional response in participants.

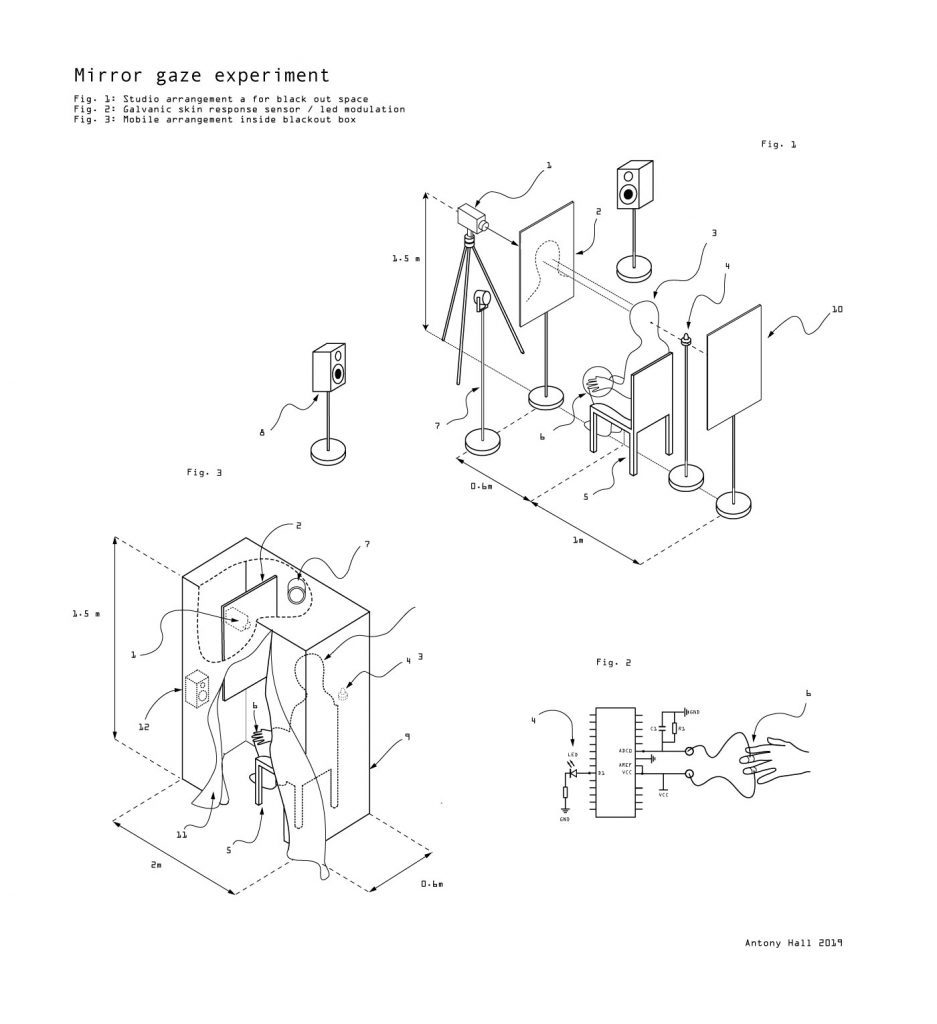

This was a re-staging of Giovanni B. Caputo’s original Mirror Gaze Test (MGT) (Caputo, 2016), in which participants experience the ‘strange face illusion’, with the addition of generative sound and light responding to biofeedback in real time, and a night-vision video camera placed behind a two-way mirror. See the schematic here…

On arrival, participants were briefed before being seated inside the cubicle. Once they were inside, sensors were fitted to their fingers to measure sweat response, a basic measure of emotional response. In line with Caputo’s original study, they were given a button to press and instructed: “If you notice a change in what you see then press the button and hold it down for as long as the change lasts.” Outside, I started the stopwatch and the sound, gradually increasing the volume while attentively watching the screen with the live feed of the participant looking at their reflected self. When the participant pressed the button to signal that they were experiencing the illusion, a light on the desk lit up. I manually noted the time and duration of these flashes, as well as each eye blink and any other significant movements of the head or eyes. All of this was video recorded for later analysis. After 10 minutes, the sound was turned off and the participant was invited to come outside and discuss their experience. As with the original MGT, the interview began with the question:

“What did you see?”

Participants gave detailed accounts of their experiences, which were recorded.

Additional probing questions, based on my own investigation, were asked if they had not already been addressed:

“Did you see any colours?”

“Did you see the faces of people other than yourself?”

“Did you see an aura or glow?”

My experiment uses a randomly flickering light directly behind the participant, which gives the appearance of an aura. However, not all participants report seeing it, and in several cases where the flickering light was not used, participants have reported changes in light levels and the presence of an aura-like glow.

“Did the sound affect what you were seeing?”

This relates to the fact that the sound is modulated by changes in skin moisture. Participants note an emotional response, for example a rise in heart rate and breathing, when they experience certain perceptions. In this experiment, this should trigger a subtle increase in sound intensity. However, participants only occasionally comment on it.

“Did you notice the blue light?”

“Did you notice the red light to your left?”

The lights are artefacts of the experimental set-up, which were brought to my attention through previous participants’ experiences. Many participants fail to perceive them during the experiment. Rather than try to remove them, I have kept them in. Perhaps they highlight a process of neural adaptation, in which colours and peripheral details seem to fade from perception while the viewer fixates on an image, a phenomenon which also plays a role in the illusion. Participants are often surprised to realise that they either didn’t notice the lights or that the lights disappeared from their perception.

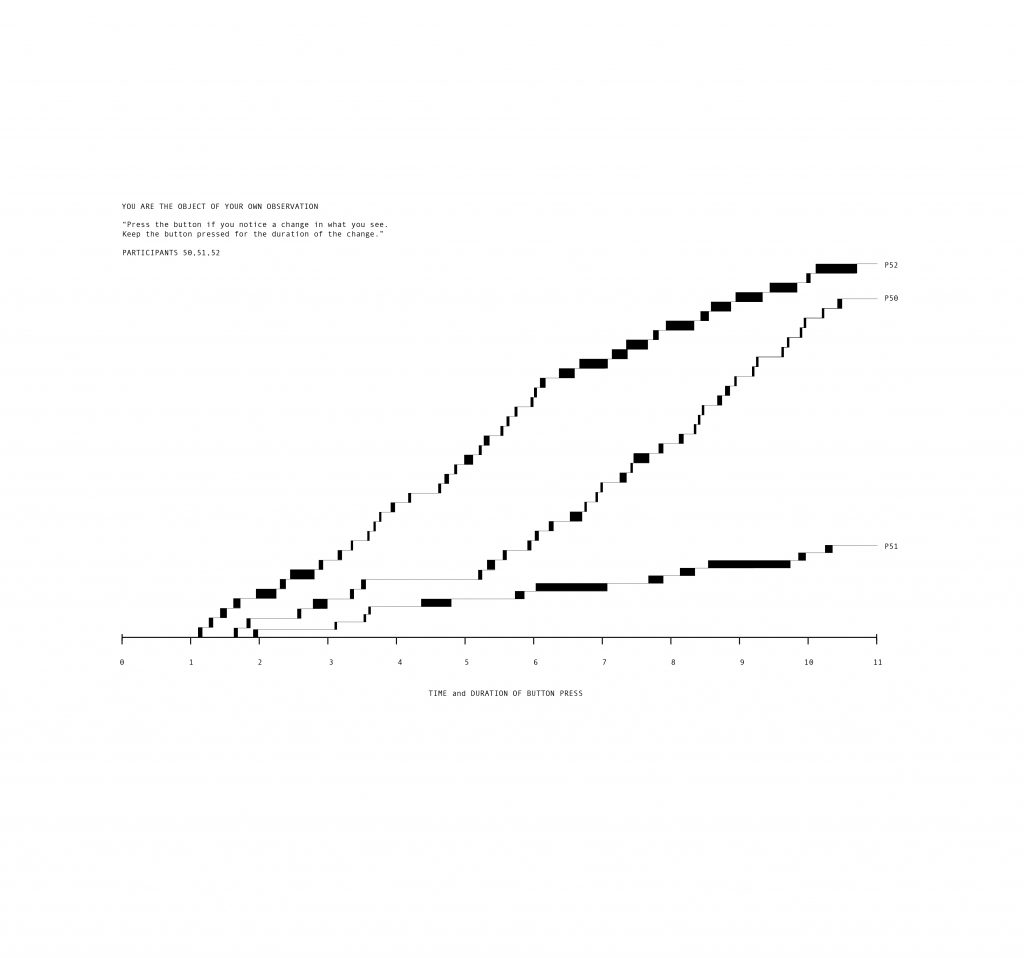

The button pressing activity

In the previous experiments, participants used a notepad to write down their experience as it happened. This, however, proved quite distracting, and the resultant notes were not particularly enlightening in comparison to the detailed accounts given in the post-experiment interviews. This time, the button was connected to a red light placed on the desk. Rather than setting up a data logger, I recorded the ‘on’ durations manually while focussing on the video feed, counting blinks and facial movements and recording both to a timeline. This could easily have been automated, and indeed that would have been more accurate, but doing it by hand demanded intensive, mindful attention from me as the experimenter. Both actors now became participants in an experience.
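For comparison, here is a rough sketch of what the automated alternative might have looked like. It is not something I used in the experiment. It assumes a hypothetical logger that samples the button as (time in seconds, pressed or not) pairs, and the sample values are made up purely for illustration. The `Press` record and the `press_intervals` function are names I have invented for this sketch.

```python
from dataclasses import dataclass


@dataclass
class Press:
    start: float     # seconds since the start of the session
    duration: float  # seconds the button was held down


def press_intervals(samples, session_end=None):
    """Turn (time, is_pressed) samples into a list of Press records."""
    presses = []
    pressed_since = None  # time the current press began, if any
    for t, is_pressed in samples:
        if is_pressed and pressed_since is None:
            pressed_since = t  # button has just gone down
        elif not is_pressed and pressed_since is not None:
            presses.append(Press(pressed_since, t - pressed_since))
            pressed_since = None  # button has just been released
    # If the button is still held when the session ends, close the press there.
    if pressed_since is not None and session_end is not None:
        presses.append(Press(pressed_since, session_end - pressed_since))
    return presses


# Made-up samples from a hypothetical 10-minute (600 s) session.
samples = [(0.0, False), (42.0, True), (47.5, False), (120.0, True), (131.0, False)]
log = press_intervals(samples, session_end=600.0)
for p in log:
    print(f"{p.start:7.1f}s  held for {p.duration:5.1f}s")
```

A log like this would give exact onset times and durations for each reported change, but it would also remove the need for the experimenter to watch closely, which is the part I wanted to keep.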