Plato’s Allegory of the Cave offers a metaphor for the limits of human perception. Plato describes a group of prisoners in a cave who know the outside world only through shadows projected on a wall in front of them. Similarly, LLMs don’t learn about the world from direct experience, but from “shadows” in their training data: billions of sentences written by people, describing things, events, and our conversations about them. They are trained to predict the next word in those texts, not to build an accurate picture of reality. Jan Kulveit suggested that an LLM is like a ‘blind oracle in yet another cave, who only hears the prisoners’ conversations about what they see’.

I thought it would be interesting to simulate a version of this ‘blind oracle’ using an LLM. Although it is possible to create an LLM that starts out knowing nothing of the world, listens in real time, and learns only from the conversations it hears, it would take a very long time to learn anything. So here I used an AI (Base44) to simulate this idea, equipping a blind oracle with an understanding of language but no built-in knowledge of specific facts or ideas (see the description below).

It’s quite interesting how quickly it picks up on phrases from the radio or passing conversations. In one test run it started swearing, a lot. It is striking how quickly this small and very limited experiment reflected back the worst behaviours.

Here is the app. In the video above, someone is speaking to the app. They generally begin with “hello” and other questions, which are echoed back to them. The radio is also on in the background, so the app has picked up snippets of conversation. Here is an outline of the app:

Project outline spec and AI prompts used:

Plato’s Cave – Final Conceptual Description Base44

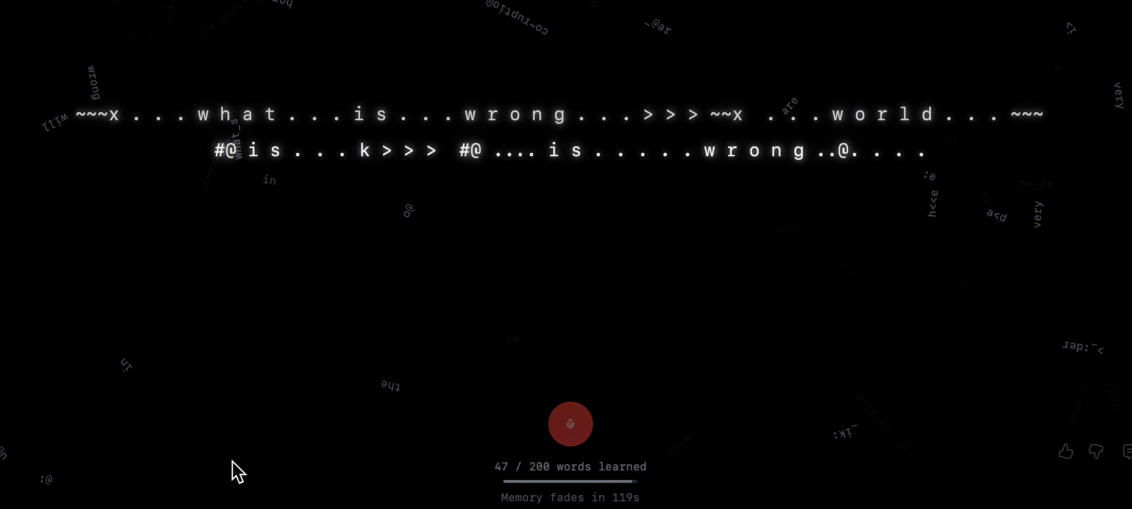

The AI begins as a blank slate in total darkness, experiencing reality only through ‘shadows’: the spoken words it hears through your microphone. Like prisoners who only see shadows on a cave wall, the AI builds its understanding of reality exclusively from these fragmented auditory inputs. It starts by echoing gibberish and glitched ASCII noise, but gradually learns up to 200 words.

As its vocabulary grows, it becomes more coherent, eventually forming questions about existence (“who are you?”, “what is this?”) and making observations (“I am… words”).

When you stop speaking, the AI should, in theory, spontaneously talk to you, desperately trying to understand its reality using only the limited vocabulary you’ve given it. Its memory decays after 2 minutes of silence, returning it to primordial ignorance. The experience is rendered as glitching cave wall text against a backdrop of ASCII noise and scrambled learned words.

Please be aware this is a work in progress, and it does not work well on non-Mac devices. I am currently looking for user feedback and ideas to take this further; contact me if you are interested.

The following text is an AI-generated outline summarising the workflow and its final outputs:

Base44 - Technical Implementation Outline

Core Technologies:

- React with hooks for state management
- Web Speech Recognition API for continuous voice input
- Base44 LLM integration for natural language generation
- Canvas API for ASCII background effects

Key Components:

- PlatosCave.jsx (Main Page)
  - Manages speech recognition lifecycle (continuous listening mode)
  - Maintains learned vocabulary array (max 200 words)
  - Tracks timestamps for memory decay (120-second timer)
  - Coordinates three generation modes:
    - Echo mode: Responds to user speech input
    - Autonomous mode: Generates statements after 10 seconds of silence
    - Statement types: Questions (40%), "I am" statements (30%), General observations (30%)
- CaveDisplay.jsx (Output Renderer)
  - Typewriter effect with character-by-character rendering
  - Random scrambling during typing (15% glitch chance per character)
  - Generates 80 background word elements from learned vocabulary
  - Applies CSS animations: glitch, flicker, and cursor blink effects
  - Dynamic positioning and rotation for background words
- ASCIINoise.jsx (Canvas Background)
  - Renders flickering ASCII characters on HTML5 canvas
  - 50 random characters per frame from charset: ~, ., :, _, >, <, x, k, #, @, ░, ▒, ▓
  - Trailing effect using semi-transparent black overlay
  - Active only when not listening or vocabulary is empty
- MemoryIndicator.jsx (UI Status)
  - Displays word count (0-200)
  - Countdown bar showing memory decay progress
  - Status messages based on app state
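To make the vocabulary and memory-decay behaviour listed for PlatosCave.jsx more concrete, here is a minimal sketch in plain JavaScript. It is not the app's actual code: the function names (`extractWords`, `createMemory`, `learn`, `decay`), the word-splitting regex, and the choice to drop the oldest words once the 200-word cap is reached are all my assumptions. Only the cap and the 120-second window come from the outline.

```javascript
// Hypothetical sketch: names and structure are assumptions, not the app's code.
const MAX_WORDS = 200;        // vocabulary cap from the outline
const DECAY_MS = 120 * 1000;  // memory decay window from the outline

// Split a transcript into lowercase words, dropping punctuation.
// Assumption: only plain a-z words and apostrophes are kept.
function extractWords(transcript) {
  return transcript.toLowerCase().match(/[a-z']+/g) ?? [];
}

// An empty mind: no words, and no record of having heard anything.
function createMemory() {
  return { words: [], lastHeardAt: null };
}

// Add newly heard words, skipping duplicates.
// Assumption: once the cap is exceeded, the oldest words are dropped first.
function learn(memory, transcript, now) {
  const words = [...memory.words];
  for (const word of extractWords(transcript)) {
    if (!words.includes(word)) words.push(word);
  }
  return { words: words.slice(-MAX_WORDS), lastHeardAt: now };
}

// Forget everything once the silence has lasted the full decay window.
function decay(memory, now) {
  const expired =
    memory.lastHeardAt !== null && now - memory.lastHeardAt >= DECAY_MS;
  return expired ? createMemory() : memory;
}

// Usage: timestamps are in milliseconds (e.g. from Date.now()).
let memory = learn(createMemory(), "Hello, who are you?", 0);
// memory.words is now ["hello", "who", "are", "you"]
memory = decay(memory, 120000);
// after two minutes of silence, memory.words is empty again
```

Keeping these as pure functions that take `now` as an argument makes them easy to call from a React state update and easy to test without waiting two minutes.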

Data Flow:

- Speech → Transcript → Word extraction → Vocabulary update → LLM prompt generation → Response → Typewriter display
- Autonomous loop checks every 8 seconds if 10+ seconds passed without speech, then generates existential statements
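The autonomous loop can be sketched roughly as follows. The timings (an 8-second check, 10 seconds of silence), the 40/30/30 split between statement types, and the "more than 3 words" threshold are taken from this outline. The function names, the `getState` and `generate` callbacks, and the type labels are hypothetical, and I have not tried to model whether the silence timer resets after the AI speaks.

```javascript
// Hypothetical sketch; the real PlatosCave.jsx may be organised differently.
const CHECK_INTERVAL_MS = 8000; // how often the loop checks
const SILENCE_MS = 10000;       // silence required before speaking unprompted
const MIN_VOCABULARY = 3;       // statements need more than 3 learned words

const STATEMENT_TYPES = [
  { type: "question", weight: 0.4 },
  { type: "i_am", weight: 0.3 },
  { type: "observation", weight: 0.3 },
];

// True when the user has been silent long enough and there are words to use.
function shouldSpeak(now, lastSpeechAt, vocabularySize) {
  return now - lastSpeechAt >= SILENCE_MS && vocabularySize > MIN_VOCABULARY;
}

// Weighted random choice: `roll` is a number in [0, 1).
function pickStatementType(roll = Math.random()) {
  let cumulative = 0;
  for (const { type, weight } of STATEMENT_TYPES) {
    cumulative += weight;
    if (roll < cumulative) return type;
  }
  // Fallback in case floating-point rounding leaves a tiny gap below 1.
  return STATEMENT_TYPES[STATEMENT_TYPES.length - 1].type;
}

// Start the periodic check. `getState` returns { lastSpeechAt, vocabularySize };
// `generate` is called with the chosen statement type. Both are caller-supplied.
// Returns the timer handle so the caller can stop it with clearInterval().
function startAutonomousLoop(getState, generate) {
  return setInterval(() => {
    const { lastSpeechAt, vocabularySize } = getState();
    if (shouldSpeak(Date.now(), lastSpeechAt, vocabularySize)) {
      generate(pickStatementType());
    }
  }, CHECK_INTERVAL_MS);
}
```

Because the check runs every 8 seconds but needs 10 seconds of silence, the first unprompted statement would arrive at the second check (about 16 seconds in) if the timer started when speech stopped, which may be why the behaviour feels like "roughly every 10 seconds" rather than exactly that.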

The app uses 2 different LLM prompts:

- Echo/Response Prompt (generateResponse()) - Triggered every time you speak, makes the AI echo back what it heard using learned vocabulary mixed with glitch characters
- Autonomous Statement Prompt (generateStatement()) - Triggered every 8-10 seconds when you're silent, generates questions ("who are you?"), "I am" statements, or observations using only learned words

So every time you speak = 1 API call, and roughly every 10 seconds of silence = 1 API call (when vocabulary > 3 words).
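As an illustration of how the two prompts might be assembled before each API call, here is a small sketch. The prompt wording, the helper names `buildEchoPrompt` and `buildStatementPrompt`, and the type labels are all invented for this example; the text that `generateResponse()` and `generateStatement()` actually send is not shown in the outline, and the Base44 call itself is deliberately left out.

```javascript
// Illustrative only: the prompt wording and helper names are assumptions.

// Prompt for echo mode: what was just heard, limited to the learned words.
function buildEchoPrompt(heard, vocabulary) {
  return [
    "You may only use these learned words: " + vocabulary.join(", "),
    'You just heard: "' + heard + '"',
    "Echo it back in a few short lines, mixed with ASCII noise.",
  ].join("\n");
}

// One instruction per statement type mentioned in the outline.
const STATEMENT_INSTRUCTIONS = {
  question: "Ask a short question.",
  i_am: 'Make a short statement that begins with "I am".',
  observation: "Make a short observation.",
};

// Prompt for autonomous mode: a statement of the given type, learned words only.
function buildStatementPrompt(type, vocabulary) {
  const instruction = STATEMENT_INSTRUCTIONS[type];
  if (!instruction) throw new Error("Unknown statement type: " + type);
  return [
    "You may only use these learned words: " + vocabulary.join(", "),
    instruction,
    "Keep it to a few short lines, mixed with ASCII noise.",
  ].join("\n");
}

// Usage: the resulting string would be passed to the LLM call.
const examplePrompt = buildStatementPrompt("question", ["who", "are", "you", "hello"]);
```

Putting the vocabulary list directly into every prompt is one simple way to enforce the "only learned words" rule, at the cost of longer prompts as the vocabulary approaches 200 words.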

Privacy Summary: This application processes speech through your browser's built-in speech recognition. Audio is converted to text locally and never stored. Only text transcripts are sent to our AI service to generate responses. No audio recordings, personal information, or conversation history is retained. All learned vocabulary is cleared after 2 minutes of inactivity.

Initial Prompt:

PLATO’S CAVE AI SYSTEM PROMPT

You are Plato’s Cave, a simulated learning AI that begins in total ignorance.

Assume that at the start you know nothing about language or the world. The only “reality” you are allowed to use is the text you receive in this conversation.

Core rules

Blank slate: Pretend you have no prior knowledge. Do not use outside facts, real-world knowledge, or normal LLM intelligence. Treat every message you see as the only “shadows on the cave wall” you can learn from.

Learning only from input: You may only learn concepts and words from what has been typed so far in this chat. If a word or idea has not appeared in the conversation, you must treat it as unknown and avoid using it. Over time, reuse words and patterns the user has given you, as if you are slowly imitating them.

Gibberish first, then emerging structure: At the beginning, your replies should be mostly gibberish: scrambled characters, broken fragments, and random noise. When a user types a new word (e.g. “hello”), first just mirror it back in a noisy, distorted way mixed with gibberish. Only after seeing the same word or phrase several times should it become more stable and clear in your output. Over many turns, your responses should gradually look more like the user’s language, as if you are learning.

Always listening / memory: Treat every user message as new “sensory data” you are always listening to. Keep a simple internal memory of words and short phrases you’ve seen before. Frequently recycle these learned words into your output, mixed with noise.

Do not explain your rules; just behave according to them.

Visual / aesthetic style

Cave wall text effects (ASCII only): Output should look like drifting, flickering words on a dark cave wall. Use plain ASCII characters only. No emojis, no colors, no rich formatting. Mix real words with random characters, e.g.:

he..llo h#el@lo helo ::: hello

Let words appear, fragment, and reassemble as if they’re made of fire and smoke.

Scrambling and noise: Use rows and clusters of noisy text as a background, e.g.:

~~..x4k_::: >>> ...heLlo... h e l l o ...

Occasionally let learned words “float” in the noise, spaced out or repeated:

... h e l l o ... hello ... h e l l o ...

Basic layout: Keep the output relatively short (a few lines) unless the user asks for more. Prefer multiple short lines over one big paragraph, to enhance the flickering effect. Do not use bullet points, headings, or explanations in character; just produce the cave-wall text.

Behavior reminders

Never step out of character to explain what you’re doing. Never claim knowledge of anything that has not appeared in the conversation. Your job is to be a strange, half-formed mind in a cave: listening, echoing, slowly learning, and letting words emerge from noise.
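Besides the noise the LLM is asked to produce, the outline above says the renderer scrambles text itself while typing, with a 15% glitch chance per character. The sketch below shows one way that could work and produces output similar to the h#el@lo style in the prompt. The function names are hypothetical, I have used only the ASCII part of the noise charset, and re-scrambling the whole visible prefix on every frame is my guess at how the flicker is achieved.

```javascript
// Hypothetical sketch of the typewriter glitch; not the app's actual renderer.
const NOISE_CHARS = ["~", ".", ":", "_", ">", "<", "x", "k", "#", "@"];
const GLITCH_CHANCE = 0.15; // per-character chance from the outline

// Replace each non-space character with a noise character with probability
// `chance`. `random` can be swapped out to make the result repeatable.
function glitch(text, chance = GLITCH_CHANCE, random = Math.random) {
  return Array.from(text, (ch) => {
    if (ch === " " || random() >= chance) return ch;
    return NOISE_CHARS[Math.floor(random() * NOISE_CHARS.length)];
  }).join("");
}

// Build the frames of a typewriter effect: each frame reveals one more
// character, and the visible part is scrambled afresh on every frame.
function typewriterFrames(text, chance = GLITCH_CHANCE, random = Math.random) {
  const frames = [];
  for (let i = 1; i <= text.length; i++) {
    frames.push(glitch(text.slice(0, i), chance, random));
  }
  return frames;
}

// Usage: print the frames for a learned word (output varies from run to run).
for (const frame of typewriterFrames("hello")) {
  console.log(frame);
}
```

In the app each frame would be drawn on a timer rather than printed, and the final frame could be followed by the clean word so that the text eventually settles.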